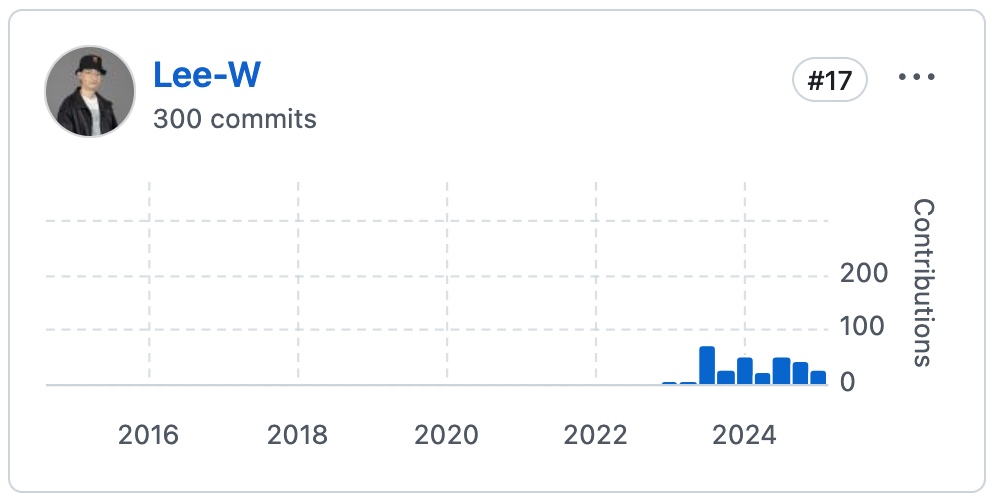

Following up on "Achieve 200 contributions in Apache Airflow".

Just a quick reflection on what I did between 200 and 300 contributions.

It seems that most of the PRs still relate to Dataset/Asset. I thought I had spent more time on AIP-72 and AIP-83, but perhaps those were PRs created by others that I took over. Or maybe my memory is just failing me...

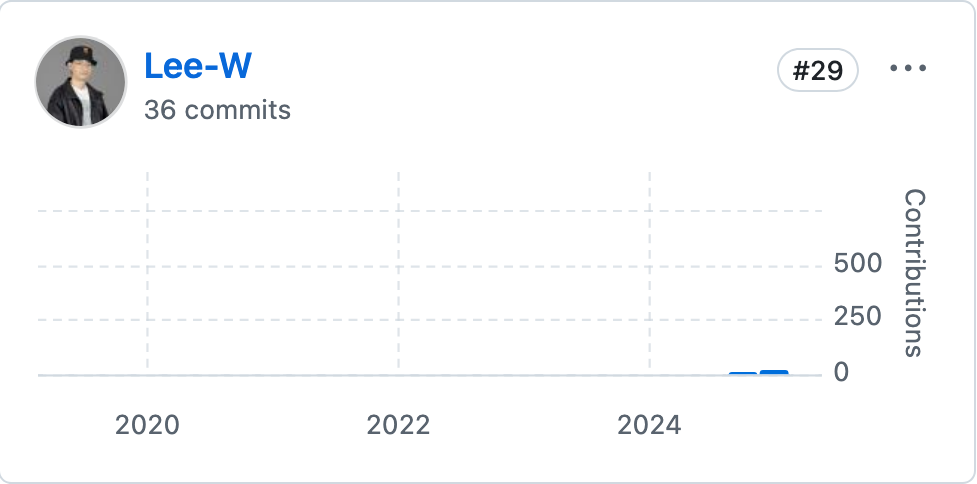

Besides the Airflow repo itself, I also spent a decent amount of time contributing Airflow rules to ruff, which makes me the 29th contributor to ruff. It’s kind of weird, considering that I know almost nothing about Rust.

Dataset / Asset Alias

- set "has_outlet_datasets" to true if "dataset alias" exists

- Add dataset alias unique constraint and remove wrong dataset alias removing logic

- docs(dataset): illustrate when dataset aliases are resolved

- fix DagPriorityParsingRequest unique constraint error when dataset aliases are resolved into new datasets

- fix(dag): avoid getting dataset next run info for unresolved dataset alias

- allow dataset alias to add more than one dataset events

Dataset / Asset

- Add DatasetDagRunQueue to all the consuming DAGs of a dataset alias

- Fix dataset page cannot correctly load triggered dag runs due to lack of dagId

- Show only the source on the consumer DAG page and only triggered DAG run in the producer DAG page

- fix wrong link to the source DAG in consumer DAG's dataset event section

- feat(datasets): make strict_dataset_uri_validation default to True

- Rewrite how dag to dataset / dataset alias are stored

- Rewrite how DAG to dataset / dataset alias are stored

- fix(datasets/managers): fix error handling file loc when dataset alias resolved into new datasets

AIP-74, 75 - Data Asset and Asset Centric Syntax

- Rename dataset related python variable names to asset

- Rename Dataset database tables as Asset

- Rename dataset endpoints as asset endpoints

- fix(assets/managers): fix error handling file loc when asset alias resolved into new assets

- Rename dataset as asset in UI

- feat(providers/amazon): Use asset in common provider

- feat(providers/openlineage): Use asset in common provider

- feat(providers/fab): Use asset in common provider

- Add Dataset, Model asset subclasses

- fix(migration): fix dataset to asset migration typo

- Fix AIP-74 migration errors

- fix typo in dag_schedule_dataset_alias_reference migration file

- add migration file to rename dag_schedule_dataset_alias_reference constraint typo

- Resolve warning in Dataset Alias migration

- fix(providers/fab): alias is_authorized_dataset to is_authorized_asset

- fix(providers/amazon): alias is_authorized_dataset to is_authorized_asset

- remove the to-write asset active dag warnings that already exists in the db instead of those that does not exist

- Move Asset user facing components to task_sdk

- Add missing attribute "name" and "group" for Asset and "group" for AssetAlias in serialization, api and methods

- fix(scheduler_job_runner/asset): fix how asset dag warning is added

- Raise deprecation warning when accessing inlet or outlet events through str

- feat(dataset): allow "airflow.dataset.metadata.Metadata" import for backward compat

- feat(datasets): add backward compat for DatasetAll, DatasetAny, expand_alias_to_datasets and DatasetAliasEvent

- Respect Asset.name when accessing inlet and outlet events

- fix(providers/common/compat): add back add_input_dataset and add_output_dataset to NoOpCollector

- Raise deprecation warning when accessing metadata through str

- Fail a task if an inlet or outlet asset is inactive or an inactive asset is added to an asset alias

- feat(asset): change asset inactive warning to log Asset instead of AssetModel

- Combine asset events fetching logic into one SQL query and clean up unnecessary asset-triggered dag data

AIP-72 - Task Execution Interface

Tooling for migrating Airflow 2 to 3

- ci(github-actions): add uv to news-fragment action

- docs(newsfragement): fix typos in 41762, 42060 and remove unnecessary 41814

- docs(newsfragment): these deprecated things are functions instead of arguments

- docs(newsfragment): add template for significant newsfragments

- feat(cli): add "core.task_runner" and "core.enable_xcom_pickling" to unsupported config check to command "airflow config lint"

- Update existing significant newsfragments with the later introduced template format

- Extend and fix "airflow config lint" rules

- Backport "airflow config lint"

- Add newsfragment and migration rules for scheduler.dag_dir_list_interval → dag_bundles.refresh_interval configuration change

- Add missing significant newsfragments and migration rules needed

- ci(github-actions): add a script to check significant newsfragments

- docs(newsfragment): add significant newsfragment to PR 42252

- ci(github-actions): relax docutils version to support python 3.8

- docs(newsfragments): update migration rules status

- fix(task_sdk): add missing type column to TIRuntimeCheckPayload

- docs(newsfragments): update 46572 newsfrgments content

- Fix significant format and update the checking script

- feat: migrate new config rules back to v2-10-test

- docs(newsfragments): update migration rules in newsfragments

AIP-83 amendment - Restore uniqueness for logical_date while allowing it to be nullable

Providers

- add missing sync_hook_class to CloudDataTransferServiceAsyncHook

- fix test_yandex_lockbox_secret_backend_get_connection_from_json by removing non-json extra

- handle ClientError raised after key is missing during DyanmoDB table.get_item

- fix(providers/common/sql): add dummy connection setter for backward compatibility

- feat(providers/common/sql): add warning to connection setter

- fix(providers/databricks): remove additional argument passed to repair_run

- fix(provider/edge): add back missing method map

- docs(newsfragments): fix typo and improve significant newfragment template

Misc

- fix(TriggeredDagRuns): fix wrong link in triggered dag run

- Return string representation if XComArgs existing during resolving and include_xcom is set to False

- Allowing DateTimeSensorAsync, FileSensor and TimeSensorAsync to start execution from trigger during dynamic task mapping

- refactor how triggered dag run url is replaced

- Change inserted airflow version of "update-migration-references" command from airflow_version='...' to airflow_version="..."

- Fix missing source link for the mapped task with index 0

- remove the removed --use-migration-files argument of "airflow db reset" command in run_generate_migration.sh

- docs(deferring): fix missing import in example and remove unnecessary example

- Set end_date and duration for triggers completed with end_from_trigger as True

- ci: improve check_deferrable_default script to cover positional variables

- ci: improve check_deferrable_default script to cover positional variables

- Add warning that listeners can be dangerous

- ci: auto fix default_deferrable value with LibCST

- ci(pre-commit): lower minimum libcst version to 1.1.0 for python 3.8 support

- Autofix default deferrable with LibCST

- add "enable_tracemalloc" to log memory usage in scheduler

- ci(pre-commit): migrate pre-commit config

- fix(dag_warning): rename argument error_type as warning_type

- Add newsfragment PR 43393

- refactor(trigger_rule): remove deprecated NONE_FAILED_OR_SKIPPED

- Ensure check_query_exists returns a bool (#43978)

Okay, so that's it. If I ever write another summary like this, I'll start with feat(api_fastapi): include asset ID in asset nodes when calling "/ui/dependencies" and "/ui/structure/structure_data" #47381.