This is more like a personal reflection, and I really doubt it would benefit anyone. But it's my blog anyway. I can write whatever I want, lol.

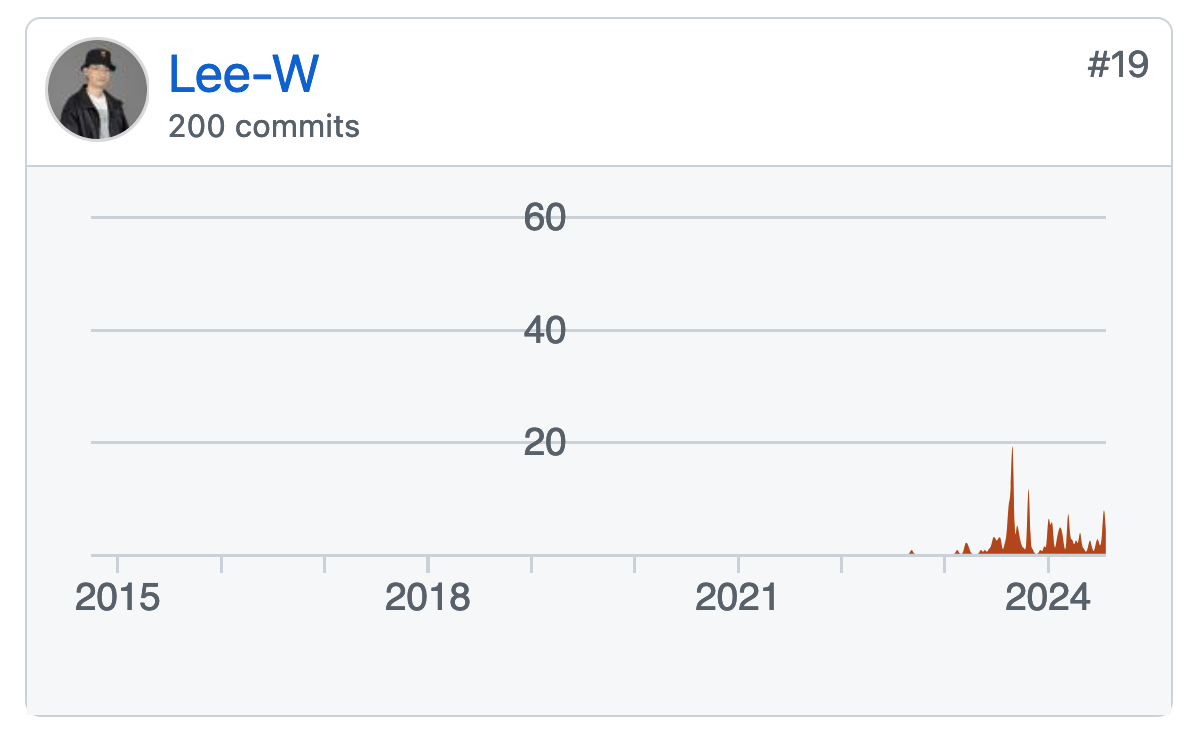

There are actually 202 now, but I took the screenshot when the count reached 200, so I'll just keep 200 in the title.

The merged pull request count is at 166 (+2). I'm not sure where the remaining 34 contributions come from; perhaps from the suggestions I made on PRs I reviewed. There are 2 PRs still in review:

- Add dataset alias unique constraint and remove wrong dataset alias removing logic

- set "has_outlet_datasets" to true if "dataset alias" exists

Reaching 200 contributions seems like a good opportunity to reflect on what I have done on the Airflow project since I joined Astronomer. There might be typos in the PR titles, but I'll keep them as they are. I tried to group related PRs into subgroups, and whatever cannot be easily categorized goes into a "misc" section.

The number of PRs might appear higher than the value I added (and it actually is). This is due to my development habits. Whenever possible, I prefer to keep commits small and clean, so it's easier to revert if I did something dumb or wrong. However, I must admit I probably created too many PRs for the Azure managed identity feature. Ideally, the feature PRs should have included the documentation updates as well. But yep, I was eager to land the feature first back then. It is also suggested to have separate PRs for each Airflow provider, even if it's basically the same feature.

- After joining Astronomer

- Add "DatasetAlias" for creating datasets or dataset events in runtime

- Start task execution directly from the trigger

- Add REST API endpoint to manipulate queued dataset events

- Upgrade apache-airflow-providers-weaviate to 2.0.0 for weaviate-client >= 4.4.0 support

- Improve trigger stability by adding "return" after "yield"

- Contribute astronomer-providers functionality to apache-airflow providers

- Add Azure managed identities support to apache-airflow-providers-microsoft-azure

- Make all existing sensors respect the "soft_fail" argument in BaseSensorOperator

- Add default_deferrable configuration for easily turning on the deferrable mode of operators

- Security improvement

- Misc (core)

- Misc (provider)

- Misc (doc only)

- Before Joining Astronomer

After joining Astronomer

Add "DatasetAlias" for creating datasets or dataset events in runtime

- Check dataset_alias in inlets when use it to retrieve inlet_evnets

- Add string representation to dataset alias

- add example dag for dataset_alias

- add test case test_dag_deps_datasets_with_duplicate_dataset

- Extend dataset dependencies

- Extend get datasets endpoint to include dataset aliases

- Retrieve inlet dataset events through dataset aliases

- Link dataset event to dataset alias

- Scheduling based on dataset aliases

- Add DatasetAlias to support dynamic Dataset Event Emission and Dataset Creation

Start task execution directly from the trigger

- fix: add argument include_xcom in method rsolve an optional value

- Add start execution from trigger support for existing core sensors

- Enhance start_trigger_args serialization

- State the limitation of the newly added start execution from trigger feature

- add next_kwargs to StartTriggerArgs

- Add start execution from triggerer support to dynamic task mapping

- Prevent start trigger initialization in scheduler

- Starts execution directly from triggerer without going to worker (PR of the month)

Add REST API endpoint to manipulate queued dataset events

- add section "Manipulating queued dataset events through REST API"

- add "queuedEvent" endpoint to get/delete DatasetDagRunQueue

Upgrade apache-airflow-providers-weaviate to 2.0.0 for weaviate-client >= 4.4.0 support

- extract collection_name from system tests and make them unique

- fix weaviate system tests

- Upgrade to weaviate-client to v4

Improve trigger stability by adding "return" after "yield"

- add "return" statement to "yield" within a while loop in amazon triggers

- add "return" statement to "yield" within a while loop in dbt triggers

- add "return" statement to "yield" within a while loop in google triggers

- add "return" statement to "yield" within a while loop in azure triggers

- add "return" statement to "yield" within a while loop in http triggers

- add "return" statement to "yield" within a while loop in sftp triggers

- add "return" statement to "yield" within a while loop in airbyte triggers

- add "return" statement to "yield" within a while loop in core triggers

- retrieve dataset event created through RESTful API when creating dag run
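To illustrate the idea behind this group of PRs, here is a minimal sketch in plain asyncio. It is not actual Airflow trigger code: the function names and the list of states are made up for illustration. A trigger's `run` method is an async generator, and when the `yield` sits inside a `while` loop without a following `return`, the loop keeps going if the consumer continues to iterate.

```python
import asyncio
from typing import AsyncIterator, List


async def run_without_return(states: List[str]) -> AsyncIterator[str]:
    """Hypothetical trigger loop: yields on success but keeps looping."""
    idx = 0
    while idx < len(states):
        if states[idx] == "success":
            yield "event: success"
            # No "return" here, so the loop continues after the event.
        idx += 1
        await asyncio.sleep(0)  # stand-in for the polling interval


async def run_with_return(states: List[str]) -> AsyncIterator[str]:
    """Same loop, but the generator ends right after the first event."""
    idx = 0
    while idx < len(states):
        if states[idx] == "success":
            yield "event: success"
            return  # stop the generator once the event has been emitted
        idx += 1
        await asyncio.sleep(0)


async def collect(agen: AsyncIterator[str]) -> List[str]:
    """Drain an async generator into a list."""
    return [event async for event in agen]


# Made-up sequence of polled states.
STATES = ["running", "success", "success"]
events_without_return = asyncio.run(collect(run_without_return(STATES)))
events_with_return = asyncio.run(collect(run_with_return(STATES)))
print(len(events_without_return), len(events_with_return))
```

With the explicit `return`, the generator emits exactly one event no matter how the consumer iterates, which is the behavior these PRs aim for.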

Contribute astronomer-providers functionality to apache-airflow providers

- add repair_run support to DatabricksRunNowOperator in deferrable mode

- remove redundant else block in DatabricksExecutionTrigger

- add reuse_existing_run for allowing DbtCloudRunJobOperator to reuse existing run

- fix how GKEPodAsyncHook.service_file_as_context is used

- add service_file support to GKEPodAsyncHook

- reword GoogleBaseHookAsync as GoogleBaseAsyncHook in docstring

- add WasbPrefixSensorTrigger params breaking change to azure provider changelog

- style(providers/google): improve BigQueryInsertJobOperator type hinting

- Check cluster state before defer Dataproc operators to trigger

- Fix WasbPrefixSensor arg inconsistency between sync and async mode

- avoid retrying after KubernetesPodOperator has been marked as failed

- check sagemaker training job status before deferring SageMakerTrainingOperator

- check transform job status before deferring SageMakerTransformOperator

- check ProcessingJobStatus status before deferring SageMakerProcessingOperator

- add deferrable mode to RedshiftDataOperator

- add use_regex argument for allowing S3KeySensor to check s3 keys with regular expression

- add deferrable mode to RedshiftClusterSensor

- check job_status before BatchOperator execute in deferrable mode

- remove event['message'] call in EmrContainerOperator.execute_complete|as the key message no longer exists

- Check redshift cluster state before deferring to triggerer

- handle tzinfo in S3Hook.is_keys_unchanged_async

- add type annotations to Amazon provider "execute_coplete" methods

- iterate through blobs before checking prefixes

Add Azure managed identities support to apache-airflow-providers-microsoft-azure

- setting use_async=True for get_async_default_azure_credential

- add managed identity support to AsyncDefaultAzureCredential

- Refactor azure managed identity

- add managed identity support to fileshare hook

- add managed identity support to synapse hook

- add managed identity support to azure datalake hook

- add managed identity support to azure batch hook

- add managed identity support to wasb hook

- add managed identity support to adx hook

- add managed identity support to asb hook

- add managed identity support to azure cosmos hook

- add managed identity support to azure data factory hook

- add managed identity support to azure container volume hook

- add managed identity support to azure container registry hook

- add managed identity support to azure container instance hook

- Reuse get_default_azure_credential method from Azure utils for Azure key valut

- make DefaultAzureCredential configurable in AzureKeyVaultBackend

- Make DefaultAzureCredential in AzureBaseHook configuration

- docs(providers/microsoft): improve documentation for AzureContainerVolumeHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for WasbHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureCosmosDBHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureFileShareHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureBatchHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureBaseHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for Azure Service Bus hooks DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureDataExplorerHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureDataLakeStorageV2Hook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureDataLakeHook DefaultAzureCredential support

- docs(providers/microsoft): improve documentation for AzureContainerRegistryHook DefaultAzureCredential support

- feat(providers/microsoft): add AzureContainerInstancesOperator.volume as a template field

- test(providers/microsfot): add system test for AzureContainerVolumeHook and AzureContainerRegistryHook

- docs(providers): replace markdown style link with rst style link for amazon and apache-beam

- test(providers/microsoft): add test cases to AzureContainerInstanceHook

- Add DefaultAzureCredential support to AzureContainerRegistryHook

- feat(providers/microsoft): add DefaultAzureCredential support to AzureContainerVolumeHook

- Add AzureBatchOperator example

- test(providers/microsoft): add test case for AzureIdentityCredentialAdapter.signed_session

- fix(providers/azure): remove json.dumps when querying AzureCosmosDBHook

- feat(providers/azure): allow passing fully_qualified_namespace and credential to initialize Azure Service Bus Client

- feat(providers/microsoft): add DefaultAzureCredential support to AzureBatchHook

- feat(providers/microsoft): add DefaultAzureCredential support to AzureContainerInstanceHook

- feat(providers/microsoft): add DefaultAzureCredential support to cosmos

- feat(providers/microsoft): add DefaultAzureCredential to data_lake

Make all existing sensors respect the "soft_fail" argument in BaseSensorOperator

- respect soft_fail argument when exception is raised for google sensors

- respect soft_fail argument when exception is raised for microsoft-azure sensors

- respect soft_fail argument when exception is raised for flink sensors

- respect soft_fail argument when exception is raised for jenkins sensors

- respect soft_fail argument when exception is raised for celery sensors

- Fix inaccurate test case names in providers

- respect soft_fail argument when exception is raised for datadog sensors

- respect soft_fail argument when exception is raised for http sensors

- respect soft_fail argument when exception is raised for sql sensors

- respect soft_fail argument when exception is raised for sftp sensors

- respect soft_fail argument when exception is raised for spark-kubernetes sensors

- respect soft_fail argument when exception is raised for google-marketing-platform sensors

- respect soft_fail argument when exception is raised for dbt sensors

- respect soft_fail argument when exception is raised for tableau sensors

- respect soft_fail argument when exception is raised for ftp sensors

- respect soft_fail argument when exception is raised for alibaba sensors

- respect soft_fail argument when exception is raised for airbyte sensors

- respect soft_fail argument when exception is raised for amazon sensors

- respect "soft_fail" argument when running BatchSensor in deferrable mode

- Respect "soft_fail" for core async sensors

- Respect "soft_fail" argument when "poke" is called

- respect soft_fail argument when ExternalTaskSensor runs in deferrable mode

Add default_deferrable configuration for easily turning on the deferrable mode of operators

- build(pre-commit): add list of supported deferrable operators to doc

- build(pre-commit): check deferrable default value

- Add default_deferrable config (PR of the month)

Security improvement

- Disable rendering for doc_md

- check whether AUTH_ROLE_PUBLIC is set in check_authentication

- check whether AUTH_ROLE_PUBLIC is set in check_authentication

- fix(api_connexion): handle the cases that webserver.expose_config is set to "non-sensitive-only" instead of boolean value

Misc (core)

- catch sentry flush if exception happens in _execute_in_fork finally block

- add PID and return code to _execute_in_fork logging

- add missing conn_id to string representation of ObjectStoragePath

- Enable "airflow tasks test" to run deferrable operator

- remove "to backfill" from --task-regex argument help message

- fix(sensors): move trigger initialization from __init___ to execute

- Ship zombie info

- Catch the exception that triggerer initialization failed

- feat(jobs/triggerer_job_runner): add triggerer canceled log

- fixing circular import error in providers caused by airflow version check

Misc (provider)

- add default gcp_conn_id to GoogleBaseAsyncHook

- remove unexpected argument pod in read_namespaced_pod_log call

- fix wrong payload set when reuse_existing_run set to True in DbtCloudRunJobOperator

- migrate to dbt v3 api for project endpoints

- Replace pod_manager.read_pod_logs with client.read_namespaced_pod_log in KubernetesPodOperator._write_logs

- allow providing credentials through keyword argument in AzureKeyVaultBackend

- Fix outdated test name and description in BatchSensor

- add deprecation warning to DATAPROC_JOB_LOG_LINK

- Alias DATAPROC_JOB_LOG_LINK to DATAPROC_JOB_LINK

- Remove execute function of DatabricksRunNowDeferrableOperator

- Add missing execute_complete method for DatabricksRunNowOperator

- refresh connection if an exception is caught in "AzureDataFactory"

- feat(providers/azure): cancel pipeline if unexpected exception caught

- fix(providers/amazon): handle missing LogUri in emr describe_cluster API response

- merge AzureDataFactoryPipelineRunStatusAsyncSensor to AzureDataFactoryPipelineRunStatusSensor

- merge BigQueryTableExistenceAsyncSensor into BigQueryTableExistenceSensor

- Merge BigQueryTableExistencePartitionAsyncSensor into BigQueryTableExistencePartitionSensor

- Merge DbtCloudJobRunAsyncSensor logic to DbtCloudJobRunSensor

- Merge GCSObjectExistenceAsyncSensor logic to GCSObjectExistenceSensor

Misc (doc only)

- Add in Trove classifiers Python 3.12 support

- add Wei Lee to committer list (This is my 133rd PR)

- Erd generating doc improvement

- fix rst code block format

- docs(core-airflow): replace markdown style link with rst style link

- docs(CONTRIBUTING): replace markdown style link with rst style link

- docs: fix partial doc reference error due to missing space

- docs(deferring): add type annotation to code examples

- add a note that we'll need to restart triggerer to reflect any trigger change